Google Summer of Code with MRPT

August 12, 2018

This blog post chronicles my progress in working towards a GUI application for the automatic extrinsic calibration of range and visual sensors with Mobile Robot Programming Toolkit (MRPT) as a part of Google Summer of Code 2018.

Project mentors: Eduardo Fernandez-Moral, Jose Luis Blanco-Claraco, Hunter Laux

Project repository: autocalib-sensor-extrinsics

Student Application Period

March 12th - March 27th

When the official list of participating organizations was announced for this year’s program, I was really excited to find MRPT’s project idea for a complete GUI app for extrinsic calibration. I had been working on the extrinsic calibration of 3D LiDARs and cameras for about six months at that point, so I could really appreciate the need for such an app. Only a few extrinsic calibration packages are available as open source, and most of them are research prototypes. They are not automatic either and still require significant human intervention. A complete GUI app that integrates different automatic extrinsic calibration algorithms and can operate on natural scenes could be very useful and save a lot of effort. I immediately wanted to start working on this project, but I wasn’t sure whether I would be eligible to participate in the program since I wasn’t officially enrolled as a student anywhere (yet). I still went ahead with the idea, hoping that it would somehow get sorted out in time.

I introduced myself to the project mentors and discussed the deliverables of the project with them - the different kinds of sensors that the app should be able to support and the different algorithms that should be implemented. They wanted virtually all kinds of sensors to be supported - 3D LiDARs, 2D LiDARs, RGB-D cameras and RGB cameras - and three different algorithms - based on plane-matching, line-matching, and trajectory-matching - to be implemented. It seemed very ambitious and I wasn’t sure if I could do all of this in three months, but they assured me that it could be done. Eduardo, one of the mentors, had co-authored two of the algorithms, so he would also be able to help me with the code. With this, I drafted a plan with weekly goals and project milestones and submitted my proposal together with a very minimal GUI skeleton that I coded up using Qt.

My full project proposal can be found here.

Thankfully, my eligibility eventually got sorted out and my project also got accepted. Now onto the rewarding part!

Community Bonding Period

April 23rd to May 14th

During the bonding period I wanted to settle into the MRPT community and get comfortable with all its libraries and coding practices and get myself ready for the official coding period.

My mentors welcomed me into the community and helped me start out by setting up the project structure with all the necessary CMake scripts. Until then I had gotten away with a single CMakeLists.txt script in my projects, so I needed some time to get comfortable with all these new scripts and compiler options and internalize them. My mentors also pointed me to some existing projects within the MRPT repository as good examples of GUI design using Qt and of plane and line extraction.

I also spent some time lurking in the main repo’s issues thread and the previous years’ GSoC project issue threads to see how things are done. I felt my C++ skills had gotten a little rusty, so I spent some time reviewing C++ while also learning more about the Qt framework: the Model-View architecture, the Signals & Slots mechanism, Object Trees, and the QWidgets module - all of which I needed for my project.

I built the MRPT libraries from source and ran a few examples and built-in apps. I read through some of their API documentation pages and their coding style guide. I also had to configure Vim and QtCreator to adopt their coding style since they prefer tabs over spaces ;)

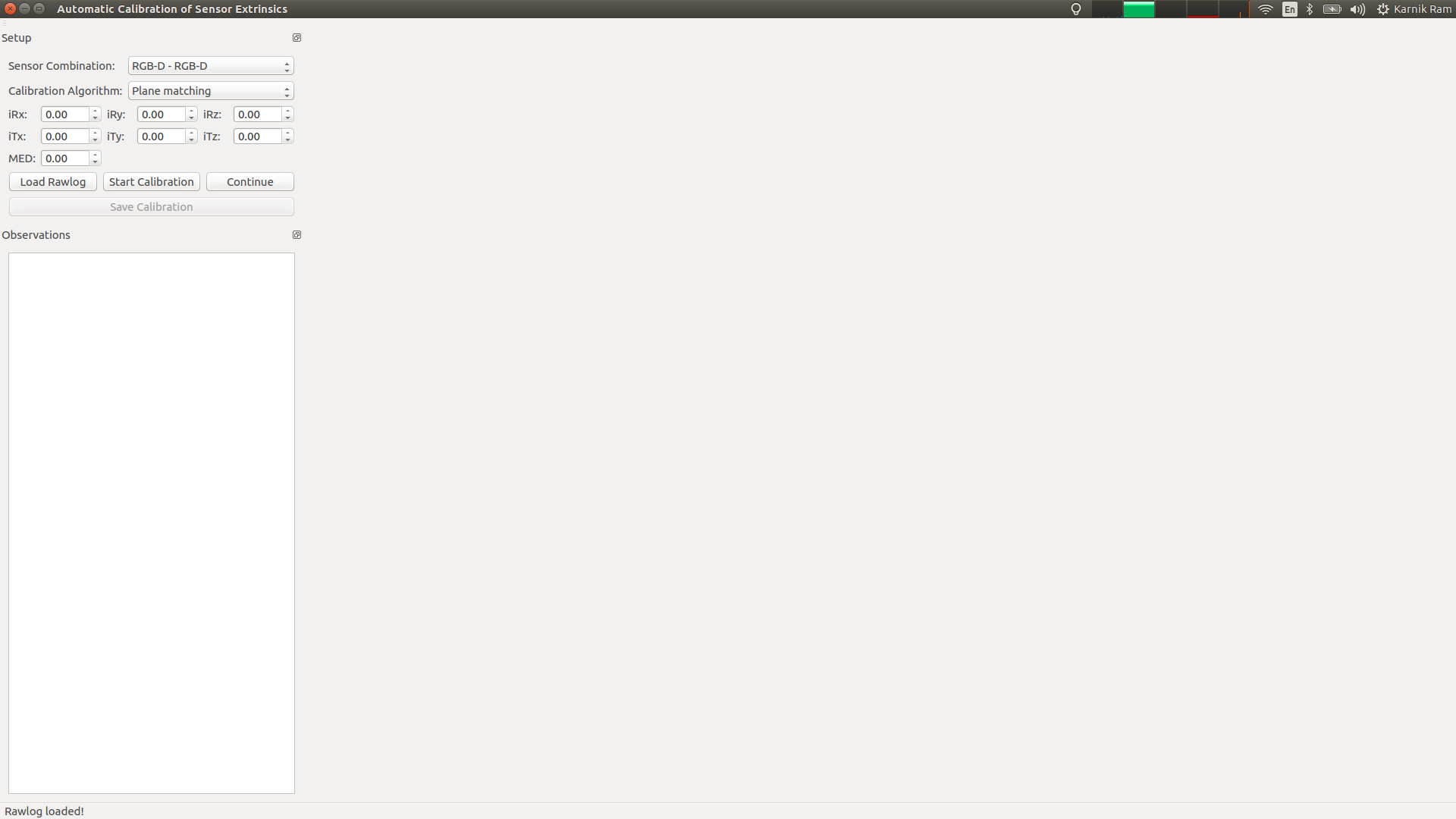

Once I felt reasonably comfortable and settled in, I resumed my work on the GUI which I had started during the application period. I decided to change the layout of the setup widget to distribute more space to the visualization widgets. I also decided to setup a QTreeView for listing all the observations in the dataset as a tree and to interact with them. I added both the widgets as QDockWidgets for more flexibility. The user can now use the app to select the sensor combination to calibrate, the calibration algorithm, and select the dataset to load. The app looks like this:

Coding Period

Week 1:

May 14th - May 20th

I started off by working on loading rawlog files into the app. As decided earlier, I wanted to load all the observations into the QTreeView, for which I needed an intermediate tree model as an interface to the QTreeView and a tree data structure to store all the observations. So I spent this week writing the CObservationTreeModel and CObservationTreeItem classes and the methods for deserializing the observations and loading them into this model as tree items. Qt’s Model-View architecture seemed intimidating at first, but I eventually got the hang of it. At this point, I realized how much work setting up the GUI actually involved, and it became clear that I wouldn’t be able to strictly follow the proposed timeline for the GUI.
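To give a feel for the kind of tree structure involved, here is a minimal, Qt-free sketch of a tree node. The class name `TreeItem` and all of its members are placeholders chosen for illustration; they are not the actual interface of CObservationTreeItem.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical tree node, for illustration only.
class TreeItem {
public:
    explicit TreeItem(std::string label, TreeItem* parent = nullptr)
        : label_(std::move(label)), parent_(parent) {}

    // Appends a child node and returns a non-owning pointer to it.
    TreeItem* appendChild(const std::string& label) {
        children_.push_back(std::make_unique<TreeItem>(label, this));
        return children_.back().get();
    }

    // Returns the child at the given row, or nullptr if out of range.
    TreeItem* child(std::size_t row) const {
        return row < children_.size() ? children_[row].get() : nullptr;
    }

    std::size_t childCount() const { return children_.size(); }
    TreeItem* parent() const { return parent_; }
    const std::string& label() const { return label_; }

    // Position of this node among its siblings; the root reports row 0.
    std::size_t row() const {
        if (!parent_) return 0;
        for (std::size_t i = 0; i < parent_->children_.size(); ++i)
            if (parent_->children_[i].get() == this) return i;
        assert(false && "item not found in its parent's child list");
        return 0;
    }

private:
    std::string label_;
    TreeItem* parent_;                                 // non-owning
    std::vector<std::unique_ptr<TreeItem>> children_;  // owning
};
```

A QAbstractItemModel subclass can then answer index(), parent(), and rowCount() by delegating to nodes like this, which is roughly the role the model class plays between the data and the QTreeView.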

Week 2:

May 21st - May 27th

Once the observations loaded into the tree properly, I proceeded to work on the visualizers. I set up all of the visualizers, put them together into a single container, and separated them using QSplitters for display flexibility. I used PCL’s PCLVisualizer class for the visualizers since I could easily integrate them with Qt as QVTKWidgets. Each visualizer ran in its own thread, which also made things easier. I added a QTextBrowser for displaying the observation description, application status, and results. I ran into some CMake issues in QtCreator which caused it to not load some source files, even though I could build and run them outside of it. Switching between two editors - Vim and QtCreator - made things messy, so I had to spend some time refactoring and cleaning up my source files and CMake scripts.

Week 3:

May 28th - June 3rd

This week, I started off by making more changes to the layout properties of the visualization widgets for better clarity. The QSplitter UI still seemed to mysteriously break whenever the window was minimized, which was frustrating.

I then linked the observation tree to the visualizers, so when the user clicks on any observation item or any observation set, the observation is visualized in the corresponding PCLVisualizer and its description is displayed in the QTextBrowser. I also contemplated using Boost.Signals library for sending updates from the core algorithms to the QTextBrowser, but proceeded to simply pass a pointer to the MainWindow ui to every core algorithm, in the interest of time.

I then started to work on implementing the plane-matching algorithm for calibration, for which I needed to first implement plane segmentation. Following PbMap as an example, I used PCL’s OrganizedMultiPlaneSegmentation method for this but I ran into some type incompatibility issues.

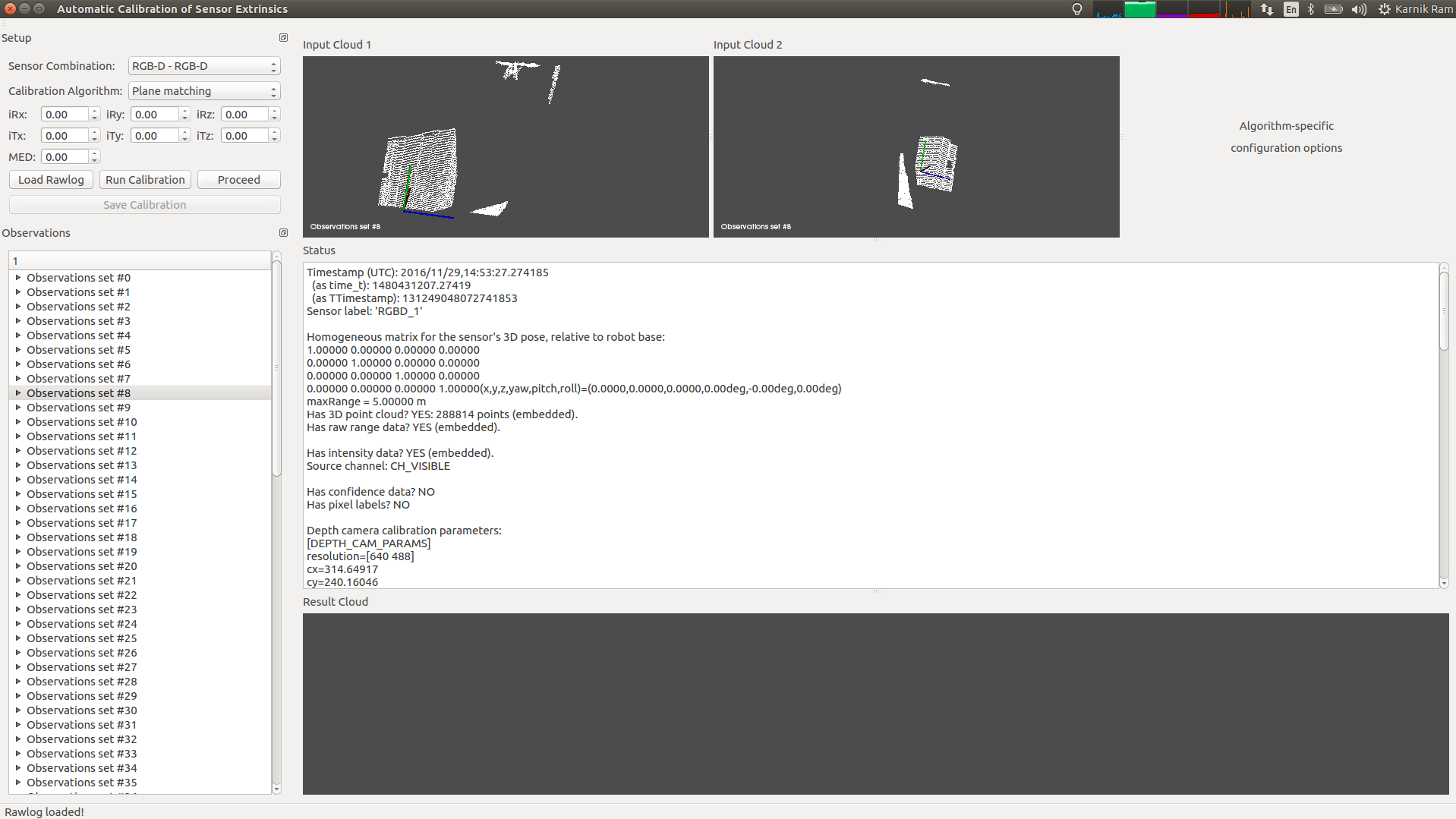

At the end of week 3 the app looks like this:

Week 4:

June 4th to June 10th

This week I further investigated the type incompatibility issue, and it turned out that both of the MRPT methods - project3DPointsFromDepthImageInto and CPointsMap::getPCLPointCloud - return unorganized point clouds even when an organized cloud is requested. So I started digging into the sources and added a new ‘setDimensions’ method and a ‘MAKE_ORGANIZED’ parameter to T3DPointProjectionParams.
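For context, an organized cloud keeps the width x height grid of the range image, so invalid pixels have to stay in place as NaN points instead of being dropped. The following is a minimal sketch of that idea under a simple pinhole model; the `OrganizedCloud` struct and the `backProject` function are hypothetical names for illustration and are not MRPT or PCL code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Point3 { float x, y, z; };

// Hypothetical container: points are stored row-major, one per pixel.
struct OrganizedCloud {
    std::size_t width = 0;
    std::size_t height = 0;
    std::vector<Point3> points;  // size == width * height

    // Pixel (u, v) always maps to the same index, valid depth or not.
    const Point3& at(std::size_t u, std::size_t v) const {
        assert(u < width && v < height);
        return points[v * width + u];
    }
};

// Back-projects a row-major depth image with pinhole intrinsics
// (fx, fy, cx, cy). Pixels without a valid depth become NaN points so that
// the grid structure is preserved.
OrganizedCloud backProject(const std::vector<float>& depth,
                           std::size_t width, std::size_t height,
                           float fx, float fy, float cx, float cy) {
    assert(depth.size() == width * height);
    const float nan = std::numeric_limits<float>::quiet_NaN();

    OrganizedCloud cloud;
    cloud.width = width;
    cloud.height = height;
    cloud.points.reserve(depth.size());

    for (std::size_t v = 0; v < height; ++v) {
        for (std::size_t u = 0; u < width; ++u) {
            const float z = depth[v * width + u];
            if (z > 0.0f) {
                cloud.points.push_back(
                    Point3{(static_cast<float>(u) - cx) * z / fx,
                           (static_cast<float>(v) - cy) * z / fy, z});
            } else {
                cloud.points.push_back(Point3{nan, nan, nan});
            }
        }
    }
    return cloud;
}
```

An unorganized cloud would simply skip the invalid pixels, which loses the neighborhood information that organized segmentation methods rely on.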

I also worked on some GUI changes for entering the configuration parameters within the app, using a separate widget that can be dynamically replaced depending on the algorithm chosen. But this led to an ugly cyclic inclusion problem, since I had already passed a pointer to the main window to the core classes and was now including the same core classes inside the main window. So, as suggested by Jose, I refactored things to simply pass the core classes a function object, containing a bind object to the viewer update methods, for sending status updates.
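The callback idea can be sketched roughly as follows. `StatusViewer`, `PlaneCalibration`, and `runWithViewer` are hypothetical stand-ins for illustration, not the project's classes; the point is only that the core class depends on a callback signature instead of a GUI header.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for the GUI-side text viewer.
class StatusViewer {
public:
    void appendStatus(const std::string& text) { lines_.push_back(text); }
    const std::vector<std::string>& lines() const { return lines_; }

private:
    std::vector<std::string> lines_;
};

// Hypothetical core class: it only knows the callback signature, so it does
// not need to include any main window header.
class PlaneCalibration {
public:
    using StatusCallback = std::function<void(const std::string&)>;

    explicit PlaneCalibration(StatusCallback callback)
        : report_(std::move(callback)) {
        assert(report_ && "a status callback is required");
    }

    void run() {
        report_("Segmenting planes");
        report_("Matching planes");
    }

private:
    StatusCallback report_;
};

// Binds the viewer's member function into a function object and hands it to
// the core class. The viewer must outlive the core object that calls it.
std::vector<std::string> runWithViewer() {
    StatusViewer viewer;
    PlaneCalibration calib(
        std::bind(&StatusViewer::appendStatus, &viewer, std::placeholders::_1));
    calib.run();
    return viewer.lines();
}
```

A lambda capturing the viewer would work equally well in place of std::bind; either way the include dependency only points from the GUI to the core.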

Week 5:

June 11th to June 17th

This week, with all the new changes in place, I proceeded to test plane segmentation again, but pulling in the latest MRPT changes, which bumped the minimum required C++ standard to C++17, broke my build. After some digging together with Jose, it turned out that Qt 5.5 (the default version of Qt in Ubuntu 16.04) doesn’t support the nested namespaces introduced in C++17. Support was added in Qt 5.9, so I installed Qt 5.9 but strangely could not get our project to use it. As a workaround, I used Qt’s preprocessor symbol - Q_MOC_RUN - to make Qt’s meta-object compiler (moc) ignore the non-Qt header files that use nested namespaces.
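The guard looks roughly like the sketch below. In a real header the guarded part would be an #include of the offending non-Qt header; here a small stand-in definition with a made-up namespace is used so that the snippet compiles on its own with a C++17 compiler.

```cpp
#include <cassert>

// moc defines Q_MOC_RUN while it scans a header, so everything inside this
// guard is hidden from moc but still seen by the regular C++ compiler.
#ifndef Q_MOC_RUN
// Stand-in for "#include <some header that uses nested namespaces>".
// The namespace and function below are hypothetical.
namespace sketch::geometry {
inline int planeParameterCount() { return 4; }  // nx, ny, nz, d
}  // namespace sketch::geometry
#endif
```

The trade-off is that moc no longer sees anything declared inside the guard, so this only suits headers that contain no Qt-specific declarations such as Q_OBJECT classes.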

After all these changes, organized point clouds were being generated properly and plane segmentation also worked. I then worked on passing the segmented planes to the viewer classes to visualize them.

Week 6:

June 18th to June 24th

This week I continued to work on visualizing the segmented planes. But passing the planes and clouds back and forth between the main window classes and the core classes led to a tightly coupled design. Eduardo pointed this out, and after some discussion we decided to implement the observer pattern. Each of the core classes (like CCalibFromPlanes) maintains a list of observers (like the viewer) and notifies them automatically of changes through a common interface, enforcing abstraction. The observers register with the subject without any tight coupling.
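A minimal sketch of the pattern is shown below. All class names (`Observer`, `Subject`, `PlaneExtractor`, `RecordingViewer`) and the string-based event are simplifications made up for illustration; the project's real interface passes richer data than a string.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical common interface that every observer implements.
class Observer {
public:
    virtual ~Observer() = default;
    virtual void onEvent(const std::string& event) = 0;
};

// Hypothetical subject base class: keeps a list of registered observers and
// notifies all of them without knowing their concrete types.
class Subject {
public:
    void attach(Observer* observer) {
        assert(observer != nullptr);
        observers_.push_back(observer);
    }

    void detach(Observer* observer) {
        observers_.erase(
            std::remove(observers_.begin(), observers_.end(), observer),
            observers_.end());
    }

protected:
    void notify(const std::string& event) {
        for (Observer* observer : observers_) observer->onEvent(event);
    }

private:
    std::vector<Observer*> observers_;  // non-owning
};

// A core class only calls notify(); it never includes a viewer header.
class PlaneExtractor : public Subject {
public:
    void run() { notify("planes extracted"); }
};

// A viewer-like observer that simply records what it was told.
class RecordingViewer : public Observer {
public:
    void onEvent(const std::string& event) override { events.push_back(event); }
    std::vector<std::string> events;
};
```

Because the subject only depends on the abstract interface, the same core class can be driven from a GUI viewer, a logger, or a test without modification.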

After these changes I was able to properly visualize the segmented planes, overlaid on the clouds, in the viewer. But somehow the RGB color information of the clouds was lost and was not being shown, even though the planes had it.

Week 7:

June 25th to July 1st

This week I worked on fixing some bugs related to linking the right planes to the right clouds during visualization. I also cleaned up all the changes I had made to the MRPT PointCloudAdapter classes in order to properly generate organized point clouds from range images, and submitted them as a pull request.

I also moved my development environment to a new server PC provided by my lab that has a faster processor and a little more RAM, to improve my workflow and save some time in the long run. I used the opportunity to switch to Ubuntu 18.04 with the latest PCL 1.8 binaries and the Qt 5.9 framework, which also solved the earlier Qt issue. I got less done this week since I was sick, and my mentor, who was attending a conference, was unavailable to discuss integrating the code for the data association and calibration solvers.

Week 8:

July 2nd to July 8th

Just as I got back to work this week, PCL threw a strange segmentation fault which had not occurred earlier. An issue was raised, and I tried compiling PCL 1.8 from source against a different version of C++ as suggested by my mentor, but the build process demanded far more memory than my system has. I also tried forcing PCL to use a different version of Eigen, as suggested in a few places, but that caused other problems. Luckily I soon got a reply on the issues thread: it turned out that the latest PCL binaries were compiled without the ‘-march=native’ flag, so removing that flag from our project worked. But that suddenly caused MRPT to segfault upon loading the rawlog. Building MRPT again in Debug mode seemed to fix it.

I then improved rawlog loading by presenting a progress dialog and by displaying the stats of the loaded rawlog. Eduardo sent me some of the data association and calibration code that he had used for getting the results in his papers, and I spent some time understanding it. I also set up some classes for calibration from lines and started with 2D line segmentation. Two image viewer widgets were also added for visualizing the images corresponding to the observations along with the clouds.

Week 9:

July 9th to July 15th

With the pressure of the second evaluations, I had to work extra this week to catch up with the proposed project timeline. I started off by making changes to the observation grouping algorithm. Earlier I was grouping based on their order in the rawlog file, but the right way would be to instead group them based on their timestamp proximity to each other. This was implemented and tested for rawlogs with more than two sensors, along with the GUI changes for selecting the sensors to be grouped, inputting the maximum allowable offset, and for displaying the grouped observations in a treeview separate from the original.
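One simple way to group by timestamp proximity is a greedy pass over the time-sorted observations, sketched below. This is my own simplified illustration and not necessarily the exact scheme used in the app; the `Observation` struct, the `groupByTimestamp` function, and the rule of comparing against the first observation in a set are all assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical minimal observation record.
struct Observation {
    std::string sensor;
    double timestamp;  // seconds
};

using ObservationSet = std::vector<Observation>;

// Greedy grouping: walk the observations in time order and keep adding to
// the current set until a sensor repeats or the offset from the first
// observation in the set exceeds maxOffset. Sets with fewer than minSensors
// observations are discarded.
std::vector<ObservationSet> groupByTimestamp(std::vector<Observation> obs,
                                             double maxOffset,
                                             std::size_t minSensors = 2) {
    assert(maxOffset >= 0.0);
    std::stable_sort(obs.begin(), obs.end(),
                     [](const Observation& a, const Observation& b) {
                         return a.timestamp < b.timestamp;
                     });

    std::vector<ObservationSet> sets;
    ObservationSet current;
    auto flush = [&]() {
        if (current.size() >= minSensors) sets.push_back(current);
        current.clear();
    };

    for (const Observation& o : obs) {
        const bool sensorSeen =
            std::any_of(current.begin(), current.end(),
                        [&](const Observation& c) { return c.sensor == o.sensor; });
        const bool tooLate =
            !current.empty() &&
            o.timestamp - current.front().timestamp > maxOffset;
        if (sensorSeen || tooLate) flush();
        current.push_back(o);
    }
    flush();
    return sets;
}
```

Since a sensor can appear at most once per set, the set size equals the number of distinct sensors in it, which is what the minSensors check relies on.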

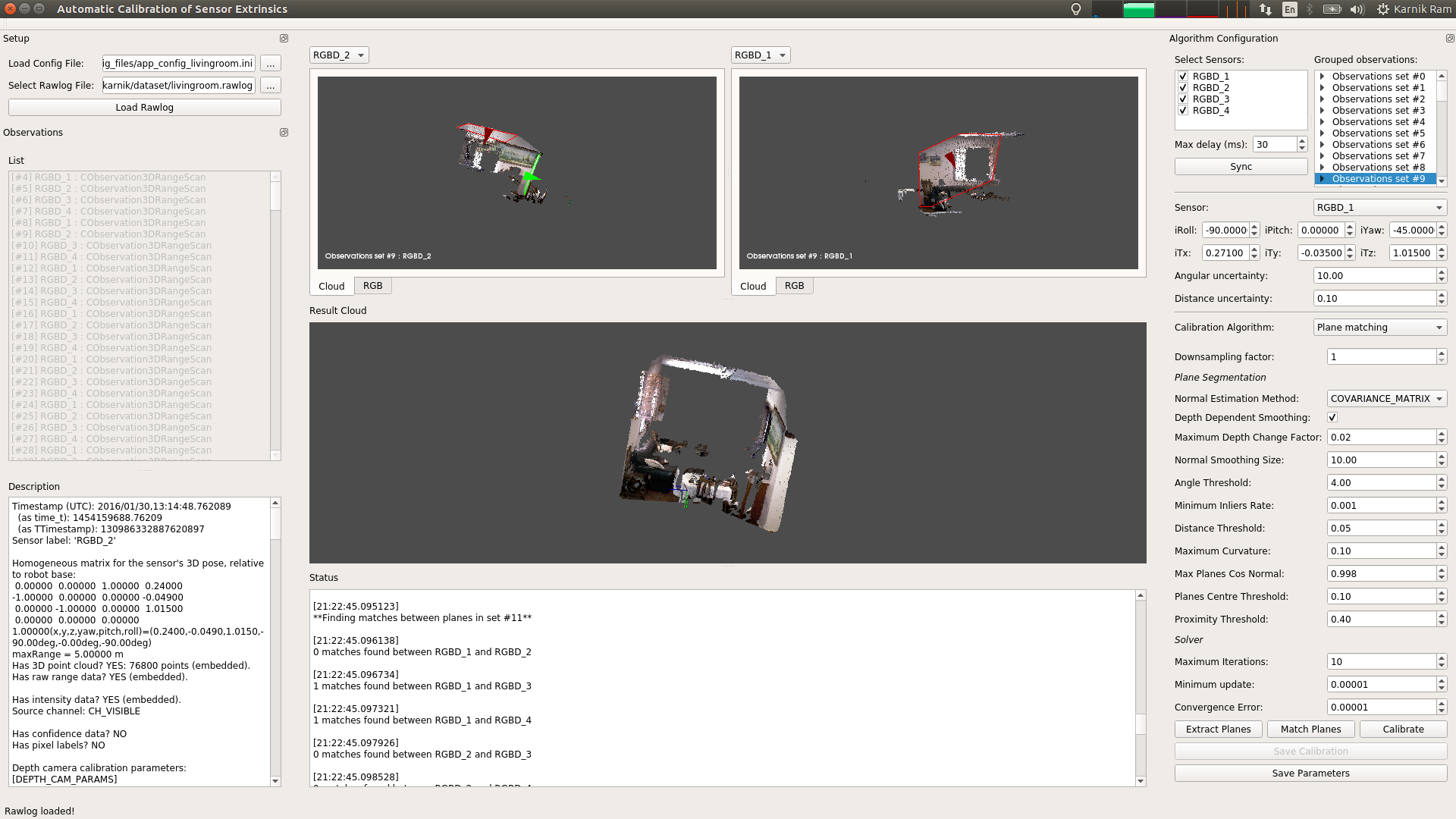

I then did some refactoring to better abstract the core calibration classes from the GUI classes by using an inheritance pattern. Eduardo also helped with this by creating some core classes like CExtrinsicCalib, which served as the base for all other core classes. He also added methods for the rotation solver from plane correspondences, fixed the point cloud color visualization bug, and helped with some refactoring for visualizing the segmented planes individually in the viewers. I then added functionality to load an app configuration file, with all the parameters for the calibration, and to edit the parameters. I also worked on visualizing the observations in a set together, using the initial calibration values, in the main viewer. Doxygen was also set up for this project, with some initial comment blocks.

I also tried launching the core algorithms in a separate thread, but that doesn’t allow publishing the status messages to the viewer with the current project structure.

At the end of the second evaluation the app looks like this:

Week 10:

July 16th to July 22nd

I began this week by fixing the issue of linking the right clouds and planes to the viewers from the new synchronized model upon clicks. Eduardo then pointed out a major issue in the design of the app where the model and the loading of the rawlog are tightly coupled to Qt classes. With the aim of being able to run the core classes without the GUI I worked on refactoring the model classes by creating non-Qt tree classes for loading and synchronizing the observations, which the Qt model classes inherit from for display and user interaction.

It took about three days to get things working as they were before, after which I worked on displaying just the contours of the extracted planes and their normals for better display clarity. I then made changes to load the configuration file and its calibration parameters from within the app before loading the rawlog file. I also tried to load, display, and edit the initial calibration values within the GUI, but the SO(3) to so(3) conversion using MRPT’s CPose3D is causing a crash.

Week 11:

July 23rd to July 29th

This week I first wrote code and made GUI changes to implement the plane matching algorithm. The extracted planes from the sensor observations in the same set are matched by applying normal and distance constraints on them. I also wrote code to then visualize these matched planes within the main viewer pair-wise, by showing the matched planes in the same color, along with their observations. I then adapted my mentor’s code for the rotation solver to my project’s structure but it is yet to be tested.
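As a rough illustration of matching under normal and distance constraints, the sketch below pairs planes whose normals are nearly parallel and whose offsets are close. It assumes both plane lists are already expressed in a common frame (for example by applying the initial calibration) and that normals are unit length; the `Plane` struct, `matchPlanes`, and the threshold parameters are hypothetical, and the real matching in the app may differ.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical plane in Hessian form: normal . x + distance = 0.
struct Plane {
    std::array<double, 3> normal;  // assumed to be unit length
    double distance;
};

double dot(const std::array<double, 3>& a, const std::array<double, 3>& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// For each plane in `a`, finds the plane in `b` with the most similar normal
// among those that satisfy both constraints. Returns index-in-a -> index-in-b.
// Note that this simple version does not enforce one-to-one matches.
std::map<std::size_t, std::size_t> matchPlanes(const std::vector<Plane>& a,
                                               const std::vector<Plane>& b,
                                               double maxAngleRad,
                                               double maxDistanceDiff) {
    assert(maxAngleRad >= 0.0 && maxDistanceDiff >= 0.0);
    const double minCos = std::cos(maxAngleRad);

    std::map<std::size_t, std::size_t> matches;
    for (std::size_t i = 0; i < a.size(); ++i) {
        double bestCos = minCos;
        for (std::size_t j = 0; j < b.size(); ++j) {
            const double c = dot(a[i].normal, b[j].normal);
            const double dd = std::fabs(a[i].distance - b[j].distance);
            if (c >= bestCos && dd <= maxDistanceDiff) {
                bestCos = c;
                matches[i] = j;
            }
        }
    }
    return matches;
}
```

Returning a map keyed by plane index makes it easy to look up whether a given plane found a partner, which is convenient when coloring matched pairs in a viewer.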

I then refactored the same code to replace vectors with maps, as advised by my mentor, since it makes the code easier to read and maintain. I am also now using an enum to better track the status of the program throughout the app, like Planes Extracted, Planes Matched, and so on. I also started storing the back-projected point clouds within their corresponding CObservationTreeItem object for quicker access and display. This week I also officially joined my university as a graduate student.

Week 12:

July 30th to Aug 5th

I began this week by fixing bugs in the visualization of the plane matching results that caused the app to crash. I’d confused the map indices in a few places while doing the refactoring. I then began testing the rotation solver on these estimated matches, but the performance is poor: the error always increases. I put that on hold and continued with 2D line extraction and its visualization, for the second algorithm. This was straightforward except for the part where I had to use shared pointers to pass the annotated (with lines) images to the viewer to deal with unwanted memory release errors. I also worked on extracting the corresponding 3D lines and projective plane normals using the range image data, and on matching them in a way similar to the plane matching algorithm.

But the visualization of the 3D lines still needs more work. An earlier issue of not being able to switch between the algorithm configuration widgets easily was fixed by using Qt’s show/hide mechanism where I add both the widgets at first but choose to show/hide each one based on the selected option.

Week 13:

Aug 6th to Aug 13th

I started off the final week of coding by getting the GUI ready for multi-sensor calibration. This meant being able to switch between the observations of multiple sensors in the viewer window, including the visualization of their extracted/matched features. This took a couple of days with a lot of testing/fixing to account for all the possible ways one could switch sensors, like before and after clicking on an observation, before and after running plane matching, and so on. Eduardo also contributed some code for the translation solver and fixed a bug in my plane match finding method.

While juggling my university classes, I then introduced a downsampling factor to sample spaced-out observation sets for the calibration, since nearby sets have almost the same features. I also worked on removing duplicates of segmented planes by using PbMap. Parameters for the angular and distance uncertainties of the sensor poses were introduced, along with their corresponding GUI changes and restructuring. The user can now directly edit the sensor poses and uncertainties within the app and observe the changes.

I then proceeded to gather all the documentation for the final submission, which includes updating this post, with the intention of returning to the code again to fix the solver issues soon.

At the end of week 13 the app looks like this:

Update: I failed my final evaluation. So close, yet so far.